Demo: Table Pick and Place

| Description |

Pick and place is a common task in manufacturing and on production lines. In this demo, the camera identifies objects and recognizes each object's bounding box, and the robot's movements are planned accordingly.

The objective of this demo is to program the robot to pick up parts randomly placed on a table, after preparing the training data and training the model.

This demo shows how to identify an object and determine its location and orientation.


| Step by step |

We first need to manually prepare the training data using approximately 60 images of an object. In each image, the object's bounding box must be marked. The images must then be split into training and validation sets. Each of these steps is explained in the sections below.
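The split into training and validation sets can be done by hand, but a short script keeps it random and reproducible. The sketch below is not part of the demo tooling: the function name `split_dataset`, the file names, and the 80/20 ratio (`val_fraction=0.2`) are hypothetical choices for illustration.

```python
import random


def split_dataset(image_names, val_fraction=0.2, seed=0):
    """Randomly split image file names into (training, validation) lists.

    val_fraction and seed are hypothetical defaults, not values from the demo.
    """
    names = sorted(image_names)      # sort first so the result does not depend on input order
    rng = random.Random(seed)        # fixed seed makes the split reproducible
    rng.shuffle(names)
    n_val = max(1, round(len(names) * val_fraction))  # keep at least one validation image
    return names[n_val:], names[:n_val]


if __name__ == "__main__":
    # Hypothetical file names standing in for ~60 captured images.
    images = [f"img_{i:03d}.png" for i in range(60)]
    train, val = split_dataset(images)
    print(len(train), len(val))  # 48 12
```

With 60 images and a 20% validation fraction, 48 images go to training and 12 to validation; each image lands in exactly one set.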


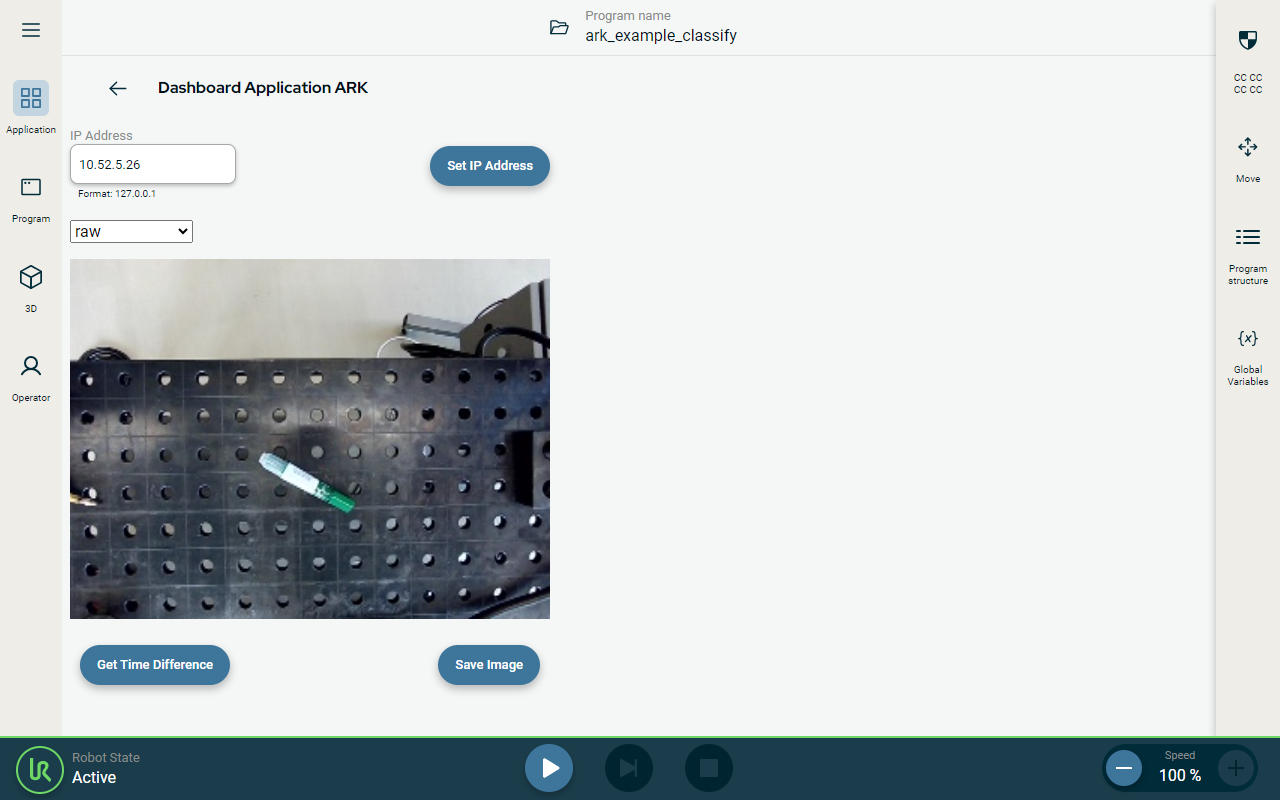

| To capture the images |


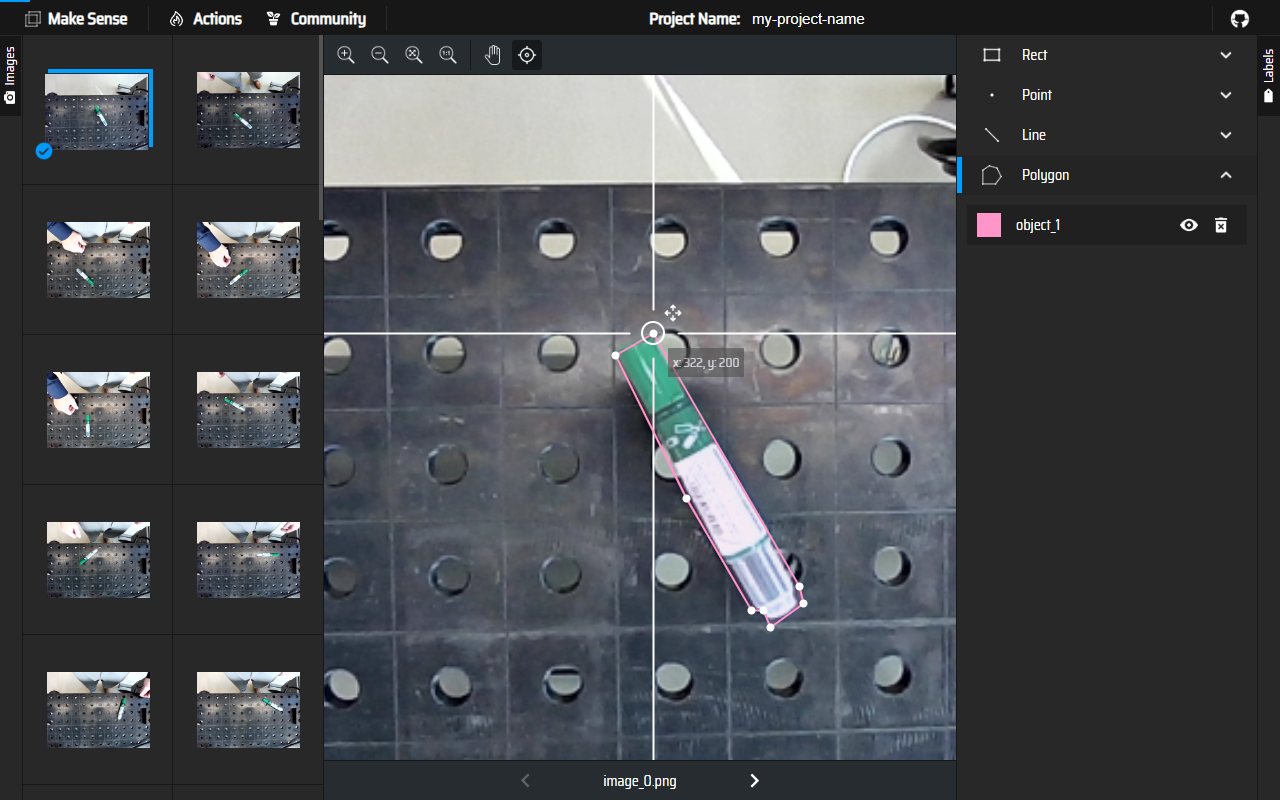

| To prepare the training set |


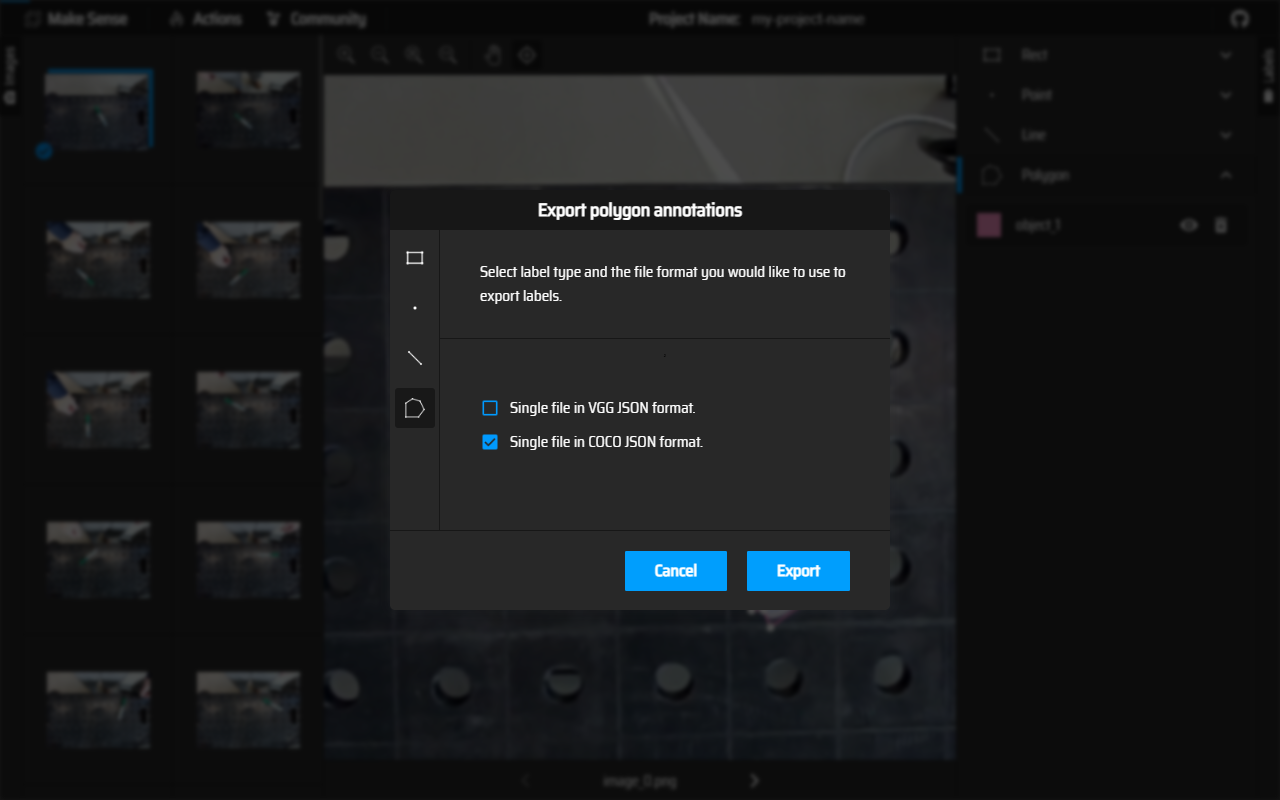

The training data consists of a JSON file together with the images it describes.
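Before training, it can help to check that the JSON file and the image folder agree. The demo does not specify the JSON layout here, so the sketch below assumes a hypothetical schema (an `images` list whose entries have a `file` name and a list of `boxes` with `x`, `y`, `width`, `height` in pixels); adapt the field names to the file your labeling tool actually produces. `load_annotations` and `missing_images` are illustrative names, not part of the SDK.

```python
import json
from pathlib import Path


def load_annotations(json_path):
    """Load a hypothetical annotation file and return {image_file: [box, ...]}.

    Assumed (hypothetical) schema:
    {"images": [{"file": "img_000.png",
                 "boxes": [{"label": "part", "x": 120, "y": 80,
                            "width": 64, "height": 40}]}]}
    where (x, y) is the top-left corner of the box in pixels.
    """
    data = json.loads(Path(json_path).read_text())
    annotations = {}
    for entry in data["images"]:
        boxes = entry.get("boxes", [])  # an image with no labeled objects gets an empty list
        for box in boxes:
            # Reject zero-area or negative boxes, which usually indicate a labeling mistake.
            if box["width"] <= 0 or box["height"] <= 0:
                raise ValueError(f"degenerate box in {entry['file']}: {box}")
        annotations[entry["file"]] = boxes
    return annotations


def missing_images(annotations, image_dir):
    """Return annotated file names that have no matching image file in image_dir."""
    return sorted(name for name in annotations if not (Path(image_dir) / name).is_file())
```

Running `missing_images` before training catches renamed or deleted images early, rather than partway through a training run.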


| To prepare the validation set |


| To train the model |

Training the model can take several minutes or longer, depending on the total number of images and the number of labeled objects in each image.

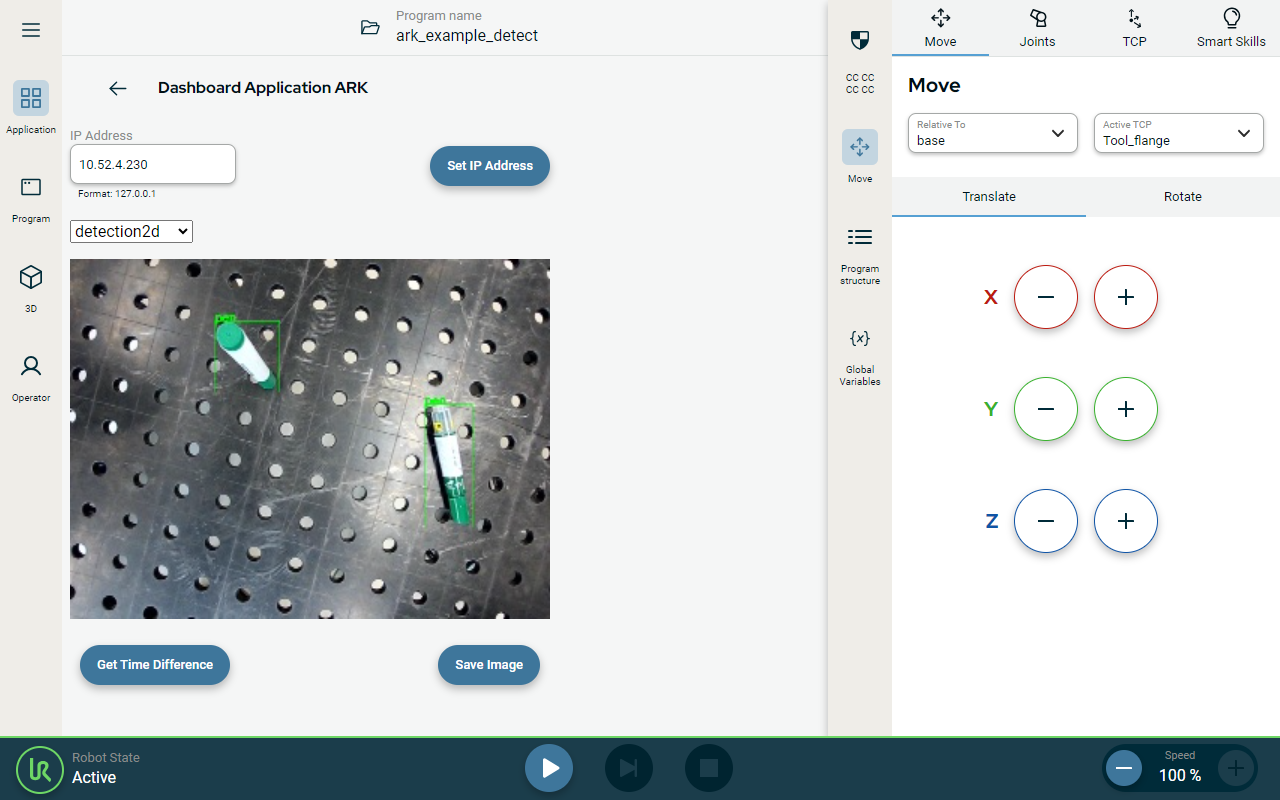

| Testing the model |


| Example of using object location |

This program uses a specific robot position, detect_wp, stored in the program. Before executing it, check that the robot can move freely to detect_wp without posing any risk.

An example of a robot program that uses the recognition results is included with the AI Accelerator SDK.
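Independently of the SDK example, the basic idea of turning a detected bounding box into a pick target can be sketched as follows. This is a minimal sketch under loud assumptions: the camera is assumed to look straight down at the table from detect_wp, with image axes aligned to table axes, so a simple linear calibration (`PixelToTable`, hypothetical, with made-up numbers) maps pixels to millimetres. The gripper yaw is a heuristic of our own (close the jaws across the shorter side of an axis-aligned box), not the method used by the SDK.

```python
from dataclasses import dataclass


@dataclass
class PixelToTable:
    """Hypothetical linear camera-to-table calibration.

    Assumes a top-down camera whose image axes are aligned with the table axes,
    so a pixel offset maps to a table offset by a constant scale. Real setups
    may need axis flips, rotation, or a full camera calibration.
    """
    mm_per_px: float    # table millimetres per image pixel
    origin_x_mm: float  # table X coordinate of image pixel (0, 0)
    origin_y_mm: float  # table Y coordinate of image pixel (0, 0)

    def to_table(self, u, v):
        return (self.origin_x_mm + u * self.mm_per_px,
                self.origin_y_mm + v * self.mm_per_px)


def pick_target(box, calib):
    """Return (x_mm, y_mm, yaw_deg) for a box given as (x, y, width, height) in pixels."""
    x, y, w, h = box
    u, v = x + w / 2, y + h / 2  # bounding-box centre in pixels
    x_mm, y_mm = calib.to_table(u, v)
    # Heuristic (hypothetical convention): yaw 0 means the jaws close along the image v axis.
    # Close across the shorter side of the box: along v if the box is wide, along u if it is tall.
    yaw_deg = 0.0 if w >= h else 90.0
    return x_mm, y_mm, yaw_deg


if __name__ == "__main__":
    calib = PixelToTable(mm_per_px=0.5, origin_x_mm=100.0, origin_y_mm=-50.0)
    print(pick_target((200, 100, 80, 40), calib))  # (220.0, 10.0, 0.0)
```

An axis-aligned bounding box only gives a coarse hint about orientation; the yaw above is a placeholder choice that a real program would replace with whatever orientation estimate the recognition results provide.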
